Recently I came across an interesting article by Jordi Cabot, in which he shares a tool vendor’s experience of high customer acquisition cost for MDD tools. In my opinion the main reason for this is that MDE is still not a mainstream methodology and hence benefits of MDD tools (and what it takes to reach those benefits) are often not clear to customers. This an issue I’ve had to deal with in all MDE-projects and currently in the CHARTER project, where Luminis is involved in industrial evaluation of an MDE tool-chain. In this post I would like to look at the problem from the customer perspective.

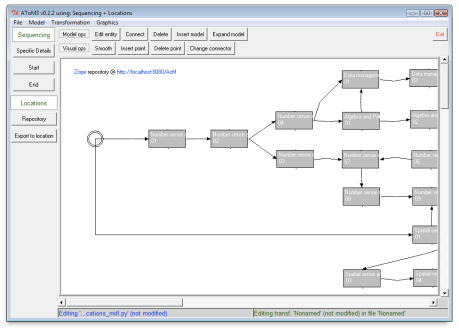

Figure 1: Customer processes and MDE tools

Given a large number and diversity of MDE tools (actually too many, one may argue), it is impractical to list added value cited by their vendors. Moreover, added value is per definition subjective to customer’s opinion. Instead I would like to step back and place these tools in customer’s context. For this I will use a simple classification model that partitions all customer processes and tools supporting them in four quadrants (see the figure). There are two dimensions: uniqueness (core/context) and technology (problem/solution). Core represents unique processes that are specific to a customer, which is in contrast to generic processes in Context. Customer’s business processes are found in category Problem. Solution is reserved for IT or Engineering processes supporting the business processes.

The four quadrants in the figure help to reason about the value of customer processes and tools. The most important are the core quadrants as these define the customer itself. Depending on the type of the customer’s industry, critical processes are found either in the 1st (service-oriented) or the 3rd quadrant (product-oriented). The context quadrants have the least value. Here customers often seek to outsource processes or purchase generic off-the-shelf solutions.

The following is a summary of MDE value for customers, based on my personal experiences in the four quadrants:

- Q4 is the hardest to sell. The reason for this is that here MDE provides a generic solution for a solution to a business problem. In other words, an unknown (and therefore regarded risky) MDE tool has to compete against multiple possible solutions that customer is more comfortable with. These include alternative mainstream technologies, in-house frameworks and run-time platforms, and even business changes that may cut ground from under the foot a tool vendor might have in the customer’s door. Warm reception by end users that love tinkering with their own ad hoc productivity solutions, is not guaranteed (not to mention their concern of becoming unneeded). Moreover, any MDE gains achieved in Q4 are likely to be watered down by waste in customer processes elsewhere. Industrial practices show that successful MDE application results in maximum 30%-40% faster overall development. These gains are among the lowest in MDE (compare these with up to 10x acceleration reported in Q1). Moreover, these gains are further relatively reduced when contrasted against alternative solutions. Last but not least, value of the initial gains is the hardest to demonstrate (you may discover that your stakeholders are not even aware of real business problems). The same stakeholders have the weakest influence over budget. It really looks like odds are against MDE tools in this quadrant today. Still, organizations without core processes, e.g. startup customers or customers developing one of a kind systems, may be interested in MDE tools here.

- Q2: MDE tools are typically not found here.

- Q3: This is the place of unique processes and numerous stakeholders from various domains: engineering disciplines (software development being one of them), architecturing, system design to name a few examples. Here MDE tools together with process optimizations can produce dramatic overall improvements (especially in product-oriented industries), which can be measured and linked to business needs recognizable by the customer. However, processes here are often knowledge-intensive and free-form, which makes the road towards these improvements longer and more complex compared to Q1, where processes are more structured and information-intensive. Off-the-shelf fixed MDE solutions are at disadvanage here.

- Q1 is the easiest for customer acquisition. Tool vendors can produce proofs of concepts in really short sprints (typically up to two weeks), deliver never seen before results (e.g. up to 10x faster development), directly relate these results to real business needs (usually falling under the business agility umbrella, but also including the more common T2M and cost reduction benefits) and have the shortest lines to budget holders. It is no wonder that vendors in this quadrant report numerous successes and are even able to grow under currently declining economic conditions.

So what kind of MDE tools are found in these four quadrants? Figure 1 illustrates my attempt to classify the tools. (This classification provides sufficient coverage and is by no means exhaustive.) The categories are:

- CIM tools are fixed business modelers with interpretation or code generation capabilities. Modifier CIM (Computation Independent Model) stresses that these tools focus on business solutions and isolate users from developing source code. Well-known examples come from Mendix and OutSystems.

- OTS are off-the-shelf applications that support generic business processes. Examples include CRM, ERP, Office and Simulation packages.

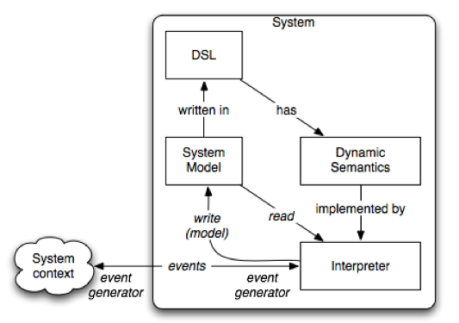

- DSM tools are language workbenches that enable customers define their own domain-specific modeling (DSM) and transformation processes. Typical tools in this group are MetaEdit+, Obeo Designer, oAW, AToM3, etc.. Note that while application of DSL tools in quadrants 1 and 2 is not unimaginable due to adaptability of such tools, there is not much “meta-” variability and too much integration complexity to justify such application.

- PIM tools are fixed UML modeling tools with (generic) code generators for one or more software technology platforms (e.g. .NET, Java, PHP). Modifier PIM (platform-independent model) indicates that users are at best isolated from details and differences of the platforms. Numerous examples can be found be found here.

Since I’ve mentioned the CHARTER project, it would be natural to place its tool-chain (aka CTC) in Figure 1 as well, especially because it presents an exception to the above experiences. CTC provides support for Java source code verification, testing and compilation, which are generic processes, thus placing CTC among PIM tools in Q4. However, due to restrictions that safety critical systems impose on those processes, there are no alternatives currently available. This coupled with huge error costs in such systems, significantly increases the tool-chain’s value for its potential customers.

Conclusion

In conclusion, I agree with Jordi that the cost of customer acquisition is high. However the situation is not the same for all MDE tool vendors. Those behind CIM tools, enjoy impressive interest from business customers. Their successes are responsible for the current breakthrough in popularity of MDE. Life is the most difficult for PIM tool vendors as too many factors may work against their tools in customer context. Moreover, they face competition from more versatile DSM tools. Last but not least, DSM tools can provide impressive benefits in Q3 (especially in product-oriented industries), but require that customer first commits to learning its own often knowledge-intensive processes. In my opinion, this quadrant and DSM tools are the stage for the next MDE breakthrough.

A necessary disclaimer is that the above conclusions are based on my own limited experiences. If you are an MDE tool vendor, customer or MDE professional, I would love to hear your opinion!