While effort reduction and quality increase are both commonly recognized benefits of MDE, the former in particular has become its trademark, thanks to numerous generative uses in model-driven software development (MDSD). Examples include the generation of code and configurations from models written in UML, DSLs and XML.

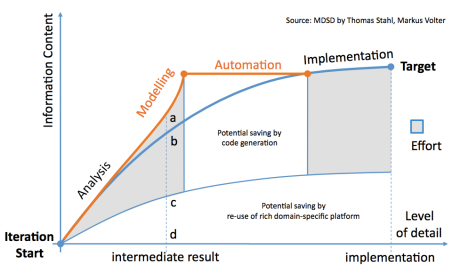

Figure: Effort in an MDE approach with partial manual coding (adapted from [1])

Generative MDE automates well-defined routine activities. Effort is an effective metric for expressing the resulting economic benefit. The figure above illustrates the effort reduction due to automation and reuse in an MDE approach with partial manual coding. Of course, generative MDE improves quality as well: error reduction, enforced architecture conformance, and up-to-date documentation all have a positive effect on software quality. But these are usually considered icing on the cake that is effort reduction. In my experience, this perspective on the economic value of MDE is common among both customers and MDE professionals. It can be summarized as “the same with less”.

More with the same

Recently a client tasked me, together with its domain experts, with assessing the benefits of applying MDE to a difficult process within the organization. Having analyzed the before and after situations, we came up with an estimated economic benefit expressed in effort savings. The estimate was hard to quantify, but “should” have been OK. Although I wanted to share this optimism, I felt that in practice the effort saving would be negligible, if not negative. This paradox arose because the largest activity in the problem domain was inherently creative and exploratory.

In the figure, the output of a single exploration in this activity is shown as the intermediate result, corresponding to line ad. As the figure suggests, code generation directly from this output is not possible (it happens further downstream in development). You may have noticed that the modelling curve rises more steeply towards point a. This rise occurs because modelling requires an increased level of domain understanding, and because semantically rich operations such as simulation, verification and (eventually) code generation need more information. On the other hand, the figure shows an effort reduction, indicated by distance cd, which results from providing end users with proper abstractions, faster access to the right knowledge, separation of concerns, DRY modelling, and maintained consistency and integrity.

While working efficiency per exploration is likely to increase (compare ab and cd), the leading concern is the quality of the output. Here the benefits are early detection of design errors, deep exploration of design choices, better communication and documentation, and maximized reuse of domain-specific platforms in further development. Moreover, the domain experts noted that any saved effort would be reinvested in more alternative explorations in search of a better output. This increased number of explorations is likely to balance out any savings due to higher efficiency.
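To make the balancing-out argument concrete, here is a back-of-the-envelope sketch. All numbers are made up for illustration and do not come from the client assessment; the simple model (total effort equals effort per exploration times the number of explorations) and the function name `total_effort` are my own assumptions.

```python
def total_effort(effort_per_exploration: float, explorations: int) -> float:
    """Total effort under the assumed model: effort per exploration
    multiplied by the number of explorations."""
    return effort_per_exploration * explorations


# Hypothetical figures, in person-days; not taken from the real assessment.
before = total_effort(effort_per_exploration=10.0, explorations=4)

# MDE makes each exploration cheaper; with the same number of explorations
# this would show up as an effort saving.
after_same_scope = total_effort(effort_per_exploration=8.0, explorations=4)

# In practice the experts reinvest the saved time in one more exploration,
# so the total effort ends up where it started.
after_reinvested = total_effort(effort_per_exploration=8.0, explorations=5)

print(before, after_same_scope, after_reinvested)
```

Under these assumed numbers the effort saving disappears once the saved time is reinvested, while the number of explored alternatives goes up from four to five. That extra exploration is where the value ends up, which is why effort alone is a poor metric for this kind of activity.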

With these insights, we expressed the economic benefits in quality metrics and linked them to different business goals than initially thought.

Conclusion

The described MDE assessment targets a highly creative engineering activity that explores alternative choices. In the extreme case, the main benefit is not effort reduction, but increased product and process quality. The icing on the cake is that processable models can open up opportunities for generative uses as well.

In my experience, such quality-driven cases, and certainly less extreme ones, are not exotic. In recent years, quite a few MDE projects I’ve participated in had benefits strongly linked to quality improvement. What are your MDE experiences with creative activities? What were the economic benefits and how were they conveyed?

References

[1] Thomas Stahl, Markus Voelter, Krzysztof Czarnecki. “Model-Driven Software Development: Technology, Engineering, Management”. Wiley, 1st edition, 2006.