AToM3 is a language workbench developed at the Modelling, Simulation and Design Lab (MSDL) in the School of Computer Science of McGill University. Please note that the reviewed version is not the latest (0.3).

The focus of the review is the language workbench capabilities, that is everything related to specification of modeling languages and automated processing of models.

Freeform Multilingual Modeling

In AToM3, models (and metamodels) are visually described as graphs. There is no support for spatial relationships, such as containment or touch. While position of modeling elements may seem to imply spatial relationships among them (e.g. among a software component and a port), AToM3 does not recognize, maintain or process such relationships.

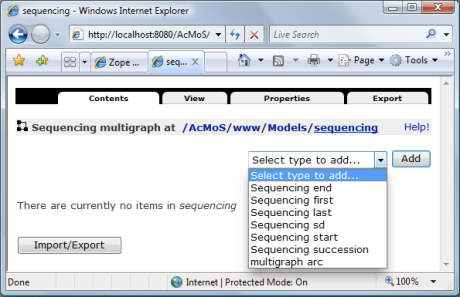

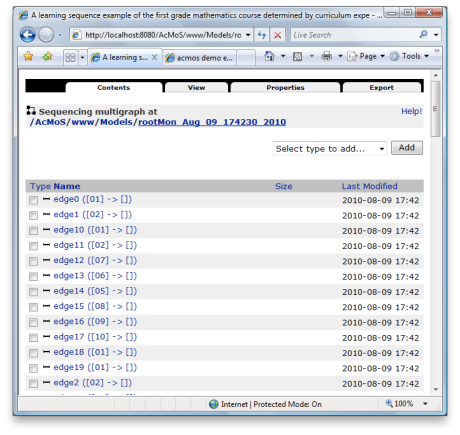

Modeling is performed by means of a visual editor: one selects a modeling concept from one of possibly multiple language toolbars (to the left of the canvas) and places (instantiates) it on the canvas. Any language toolbar can be easily removed or added by closing or opening its language-specification file. Furthermore, the toolbar itself is defined by a model in a so-called “Buttons” DSL (see Figure 1). At any time, the modeler is free to edit this model to e.g. arrange buttons in one or multiple rows, remove language concepts or specify additional buttons to launch transformations frequently used with the given language. Both language specification and toolbar files are generated by AToM3 from the language model (aka metamodel). Language independent tools like Edit, Connect, Delete form the general modeling toolbar (above the canvas).

Figure 1: A “Buttons” model for a DSL

A special feature of AToM3 is a freeform multi-language canvas. AToM3 breaks with the tradition of “strongly typed” diagrams that prevent intermixing modeling elements if not explicitly allowed by the diagram’s metamodel type. AToM3 canvas can be considered a diagram that allows any modeling elements. However, elements can only be connected if their metamodel allows this. Such canvas provides users with a high degree of modeling freedom. (As illustration of this freedom, AToM3 logo itself is a freeform model done using 5-6 DSLs). Furthermore, because models are not fragmented among islands of diagrams, information access is optimal. Another benefit is less effort on the metadeveloper’s part because a freeform model can be handled by a transformation without the prior need of metamodel integration.

Unfortunately as models grow in size and number, the single canvas does not scale well, nor does AToM3 provide the user with means to manage them.

AToM3 uses this editor and the freeform canvas in a few different contexts. The primary role is a modeling editor, however the same editor is used for metamodeling and specifying transformation definitions. Such reuse reduces the learning curve and more importantly, brings the benefits of a domain specific modeling environment and the freeform canvas to metadevelopers as well.

Language Specification

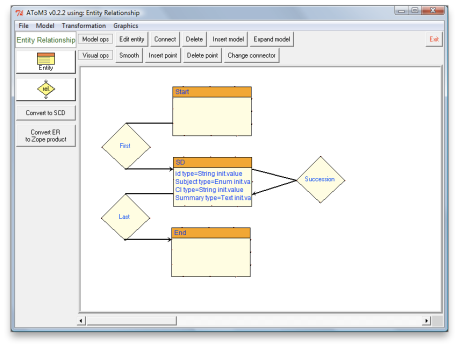

AToM3’s metalanguage is based on the Entity Relationship (ER) formalism. In order to provide complete metamodeling capabilities, concepts Entity and Relationship are extended with constraints and appearance properties (see Figure 2). Property constraints is used to define static semantics. Appearance defines visual presentation or concrete syntax of a language concept.

Figure 2: Features of Entity or Relationship. Appearance editor

AToM3 provides overall excellent metamodeling capabilities that enable metadevelopers produce level 5 quality metamodels. The following details these capabilities.

Abstract Syntax

For this task metadevelopers are equipped with the ER-based metalanguage, which is very close to conceptual modeling techniques, such as ORM. This means that there is a minimum gap between conceptual, business world-oriented models and AToM3 metamodels. In fact, AToM3 abstract syntax models are surprisingly simple and void of technical details typical for metamodels, which makes the models very readable by subject experts. Figures 2 and 4 of the Curriculum Content Sequencing (CCS) demo illustrate this point.

Concrete Syntax

A simple but sufficient editor allows to define a vector presentation for a language concept. Figure 2 shows all that the editor has to offer.

Static Semantics

The constraints property contains rules that control how a modeling element can be connected to another element to form a meaningful composition. Such rules can be defined per language concept or a model and triggered by editor events (e.g. edit, save, transformation start) or on demand by user, thus covering all imaginable ways to invoke model checking.

AToM3 constraint language is Python, which is an unusual choice. Indeed, Python is not a constraint language, not formal (in the model-driven sense), and has side effects (AToM3 is written in Python too). However, my experience with AToM3 showed that none of those are real disadvantages in practice: Python is known for a concise and easy to read syntax and as constraint language, is intended for metadevelopers (who know how to deal with side-effects). In this role, Python proved to be powerful, flexible and efficient.

Dynamic Semantics

AToM3 uses a common approach to define DSL semantics by translating language concepts to concepts in another target domain with predefined dynamic semantics (e.g, C++, Java). This approach is known as translational.

Another less common approach supported by AToM3, is by modeling the operational behavior of language concepts [1]. The operational semantics approach specifies how models can be directly executed, typically by an interpreter. Such specifications are expressed in terms of operations on the language itself, which is in contrast to translating the language into another form. The advantage is that operational semantics are easier to understand and write. The disadvantage is that interpreters are normally not available for DSLs due to the very specific nature of the latter. (For an AToM3 illustration of how to build a custom interpreter in a model-driven way, please refer to this article.)

In AToM3 translational and operational approaches are implemented as transformations.

Transformation

AToM3 employes the graph rewriting approach to transform models. Transformations themselves are declaratively expressed as graph-grammar models. My experience with transformation models written in imperative languages (e.g. QVT Operational, MERL) is that more time is spent figuring out how to navigate host model structure to access right information than actually specifying what to do with this information. Declarative approach like that of AToM3, frees the metadeveloper from having to specify navigation, thus drastically reducing complexity of transformation modeling.

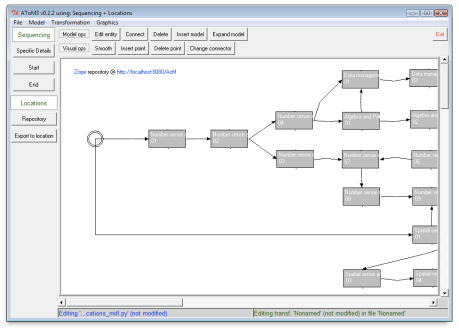

Figure 3: A GG transformation model, a rule, an LHS and an element’s properties

To define a transformation in AToM3, one needs to create a graph grammar and specify one of more GG rules. Figure 3 shows a GG model for the export transformation in the CCS demo. Each rule specifies how a (sub)graph of a so-called host graph can be replaced by another (sub)graph. These (sub)graphs are respectively called the left-hand side (LHS) and the right-hand side (RHS). A rule is assigned an order (priority), a condition and an action. In AToM3, conditions and actions are programmed in Python. As in the case with the constraint language, Python performs very well in these roles too.

A special feature of AToM3 is that both LHS and RHS can be modeled with the DSL(s) of the host graph. In fact, the (sub)graph editor is based on the above mentioned model editor, and provides the metadeveloper with the freeform multilingual canvas, customizable language toolbars and transformations. The consequence is that it is very easy to construct sub-graphs and verify them with subject experts.

Figure 4: A host model together with a “parameter” model

An interesting feature of AToM3 transformation system is that it does not feature transformation parameters. This may seem limiting, however an equally effective alternative is to store “parameter” information in a model. The AToM3 canvas makes it extremely easy to mix such “parameter” model(s) with a host model and pass them to a transformation. Figure 4 shows a sequencing model from the CCS demo together with a repository model (top left corner of the canvas). Given both, an export transformation can access the remote model repository, pass authentication, and store the sequencing model at the repository.

Another interesting feature is that transformation input can be also an element selected by users (unfortunately multiple selection does not work in this version). A promising application thereof is user-defined in-place transformations that automate frequent and routine modeling operations. For example, decomposing a group element into constituent objects (and vice-versa) with a click of a button. Industrial users that often work with large models would really appreciate the resulting reduction of repetitive strain.

Finally, AToM3 supports nearly all transformation kinds known to the author [2, 3]. It is easier to list what is unsupported: text-to-model and text-to-text (which is a consequence of the graphical nature of the language workbench), and the more exotic synchronization and bidirectional kinds. Due to its graph rewriting system, AToM3 is very strong in model-to-model (M2M) and model-to-text (M2T) transformations. A GG-based support for the latter, very popular category, is not obvious and therefore warrants an extra explanation.

M2T Transformation

In AToM3 M2T means producing textual structures from graph structures. One way of doing this is via a transformation where the source and the target models are the same. Rules of such transformation do not perform any important rewriting, but use the graphical nature of the source language to traverse and annotate the source model with temporary information that is needed for text generation. Text itself is generated by side-effects encoded in actions of rules, which can access the annotations.

A typical M2T application is code generation. An example of a non trivial code generation made with AToM3 is ZCase, a software factory for Zope. In the CCS demo, ZCase is a part of the ER→Zope transformation chain.

Conclusion

The is no escaping the fact that AToM3 is a research tool and is not suitable for demanding industrial use. The workbench does not scale well for large models (both in terms of performance and user controls) and its tools are basic. There is no reliable support, no up-to-date exhaustive documentation, no collaborative development, no integration with version control and requirement management systems, and naturally plenty of bugs and annoyances. In short, the tool is far from being mature and ready for industrial users.

However, metadevelopers may find the above drawbacks quite tolerable, because they are better prepared to deal with technical issues and metamodels typically do not stress the tool’s scalability. On the positive side, AToM3 provides simple but optimal tools and set of features that work together to create one of the most robust and powerful language workbenches I know. Thereby AToM3 is extremely suitable for agile, responsive and timely development. Due to the maturity level of the workbench, its application is best limited to proofs of concept. To date, AToM3 is the language workbench of my choice for quick prototyping.

AToM3 is recommended to MDE students, analysts in need of quick prototyping and tool vendors seeking to improve their language workbenches. In my opinion, AToM3’s metamodeling and transformation technology is nearly optimal, and is still ahead of the larger and more inert commercial workbenches. While its problems are numerous, they are run-of-the-mill and knowledge and technologies to address them are commonly available. If these problems could have been removed, then AToM3 would have been the tool I could have easily recommended to industrial customers too.

References

[1] Tony Clark, Andy Evans, Paul Sammut, and James Willans. Applied Metamodelling: A foundation for Language Driven Development. Version 0.1. Xactium Ltd., 2004.

[2] Krzysztof Czarnecki and Simon Helsen. Classification of model transformation ap- proaches. In Jorn Bettin, Ghica van Emde Boas, Aditya Agrawal, Ed Willink, and Jean Bezivin, editors, 2nd OOPSLA Workshop on Generative Techniques in the Context of Model-Driven Architecture, Anaheim, CA, October 2003. ACM Press.

[3] Tom Mens, Krzysztof Czarnecki, and Pieter Van Gorp. Discussion – a taxonomy of model transformations. In Jean Bezivin and Reiko Heckel, editors, Language Engineering for Model-Driven Software Development, volume 04101 of Dagstuhl Seminar Proceedings, Dagstuhl, Germany, 2005. Internationales Begegnungs- und Forschungszentrum fuer Informatik (IBFI), Schloss Dagstuhl, Germany.